Unless stated otherwise, please do not use my likeness for legal proceedings in the event of my untimely passing. Please.

It’s too late. There’s like fifty Tetris games.

damn

This is some Black Mirror level shit.

This is awesome. Next we can have AI Jesus endorsing Trump, AI Nicole Simpson telling us who the real killer was, and AI Abraham Lincoln saying that whole Civil War thing was a big misunderstanding and the Confederacy was actually just fine. The possibilities are endless. I can hardly wait!

I didn’t know there was a Nicole Simpson, I thought there were just Homer, Marge, Abe, Bart, Maggie, and Lisa Simpson.

The Abe statement is probably closer to reality than you might imagine.

thanks, I feel dizzy now

AI-plim says they’re just fine with it and everybody knows AI only presents objective truth.

I’d rather have somebody puppet my corpse like in Weekend at Bernie’s. Basically the same thing but more authentic

This is some Weekend at Bernie’s 2 shit.

Why even do an impact statement? All Christian victims should be assumed to forgive their attackers, right?

The fuck is wrong with people.

An AI version of Christopher Pelkey appeared in an eerily realistic video to forgive his killer… “In another life, we probably could’ve been friends. I believe in forgiveness, and a God who forgives.”

The message was well-received by Judge Todd Lang, who told the courtroom, “I love that AI.”

How does that even make sense?

Wouldn’t you lower the sentence if the victim AI says it forgives the killer? Because - you know - it significantly reduces the “revenge” angle the American justice system is based on?

Society is on the verge of total collapse

Frankly any society that embraces this sort of thing should collapse, because the alternative is too disturbing.

An AI version of Christopher Pelkey appeared in an eerily realistic video to forgive his killer… “In another life, we probably could’ve been friends. I believe in forgiveness, and a God who forgives.”

“…and while it took my murder to get my wings as an angel in heaven, you still on Earth can get close with Red Bull ™. Red Bull ™ gives you wings!” /s

This brings up an interesting question I like to ask my students about AI. A year or so ago, Meta talked about people making personas of themselves for business. Like if a customer needs help, they can do a video chat with an AI that looks like you and is trained to give the responses you need it to. But what if we could do that just for ourselves, but instead let an AI shadow us for a number of years so it essentially can mimic the language we use and thoughts we have enough to effectively stand in for us in casual conversations?

If the murdered victim in this situation had trained his own AI in such a manner, after years of shadowing and training, would that AI be able to mimic its master’s behavior well enough to give its master’s most likely response to this situation? Would the AI in the video have still forgiven the murderer, and would it hold more significant meaning?

If you could snapshot you as you are up to right now, and keep it as a “living photo” A.I. that would behave and talk like you when interacted with, what would you do with it? If you could have a snapshot AI of anyone in the world in a picture frame on your desk, who you could talk to and interact with, who would you choose?

it would hold the same meaning as now, which is nothing.

this is automatic writing with a computer. no matter what you train on, you’re using a machine built to produce things that match other things. the machine can’t hold opinions, can’t remember, can’t answer from the training data. all it can do is generate a plausible transcript of a conversation and steer it with input.

one person does not generate enough data during a lifetime so you’re necessarily using aggregated data from millions of people as a base. there’s also no meaning ascribed to anything in the training data. if you give it all a person’s memories, the output conforms to that data like water conforms to a shower nozzle. it’s just a filter on top.

in regards to the final paragraph, i want computers to exhibit as little personhood as possible because i’ve read the transcript of the ELIZA experiments. it literally could only figure out subject-verb-object and respond with the same noun as it was fed and people were saying it should replace psychologists.

The deceased’s sister wrote the script. AI/LLMs didn’t write anything. It’s in the article. So the assumptions you made for the middle two paragraphs don’t really apply to this specific news article.

i was responding to the questions posted in the comment i replied to.

also, doesn’t that make this entire thing worse?

also, doesn’t that make this entire thing worse?

No? This is literally a Victim Impact Statement. We see these all the time after the case has determined guilt and before sentencing. This is the opportunity granted to the victims to outline how they feel on the matter.

There have been countless court cases where the victims say things like “I know that my husband would have understood and forgiven [… drone on for a 6 page essay]” or have even done this exact thing, but without the “AI” video/audio (home videos with a dubbed overlay of a loved one talking about what the deceased person would want/think about it). It’s not abnormal and has been accepted as a way for the aggrieved to voice their wishes to the court. All that’s changed here is the presentation. This didn’t affect the finding of whether the person was guilty, as it was played after that finding and before sentencing. This is also the customary time when impact statements are made. The “AI” didn’t make the script. This is just a mildly fancier impact statement and that’s it. She could have dubbed it over home video with a Fiverr voice actor. Would that change how you feel about it? I see no evidence that the court treated this any differently than any other impact statement. I don’t think anyone would be fooled into thinking that the dead person is magically alive and directly making the statement. It’s clear who made it the whole time.

i had no idea this was a thing in american courts. it just seems like an insane thing to include in a murder trial

Legally speaking, this was a victim impact statement.

Convicted criminals have long had the common law right of allocution, where they can say anything they want directly to the judge before sentence is passed.

Starting a few decades ago, several states decided that the victims of crime should have a similar right to address the judge before sentencing. And so the victim impact statement was created.

It’s not evidence, and it’s not under oath, but it is allowed to influence the sentencing decision.

(Of course, victim impact statements are normally given by real victims).

It was written and created by the family… they are victims. They just wrote it from the perspective of the deceased.

Do you mean that the script was written by the family, and it was only “performed” by generative AI? That’s very interesting, and not something I heard anywhere else.

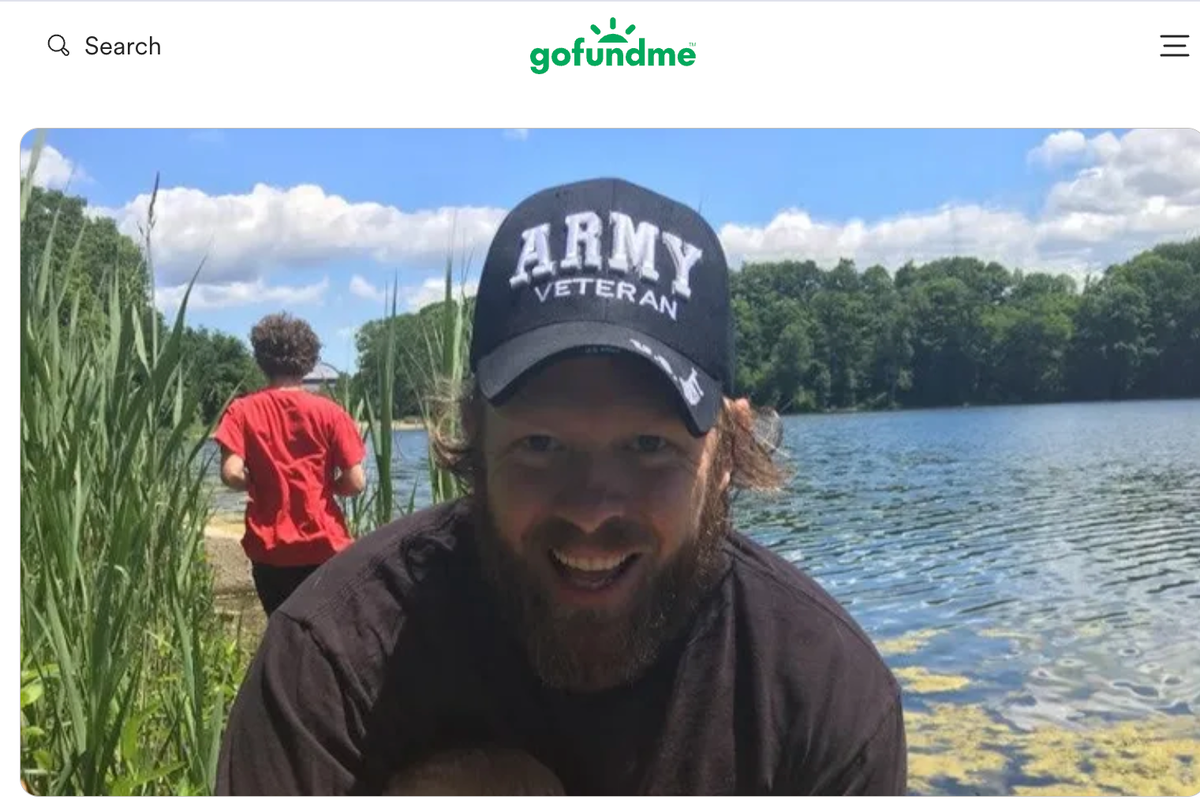

The 37-year-old Army combat veteran’s family created the AI statement using a previously recorded video, a picture and a script written by the victim’s sister, Stacey Wales.

“I said, ‘I have to let him speak,’ and I wrote what he would have said, and I said, ‘That’s pretty good, I’d like to hear that if I was the judge,’” Wales told AZFamily.

From the article. Where Wales is his sister.

Wales herself is not ready to forgive Horcasitas, but when she wrote the script, she says she knew her brother would speak of forgiveness. “He stood for people, and for God, and for love,” she says.

From a related article. https://www.azfamily.com/2025/05/06/chandler-road-rage-shooting-victim-speaks-using-artificial-intelligence/

The outrage in the comments about this is stupid. It’s clear that this is an impact statement from the family… the “AI” used here was to just generate the image of him reading the impact statement that his sister wrote.

I love AI more than anything in the world!

- Saik0

I too enjoy putting words into the mouths of other people and calling it a method of getting my own argument across.

Waaah cry more!

I added to the conversation by directly answering the question that was asked. Someone who read the article would have known the answer.

I’m sorry that reading comprehension is hard for you.

Acting like more than half of the comments on this post aren’t arguing in bad faith and thinking that the AI generated everything wholesale (content and script included) just shows how delusional you all are.

Edit: The funny part is that mkwt, the person I replied to, updooted the post, meaning that they legitimately found that information useful since they went out of their way to updoot. So you, and the other 5 people who don’t understand how to read, can pound sand.

Honestly, if I’m the defense, this has gotta be awesome, right? Now, I’m not a lawyer, but I have watched Boston Legal twice, so that’s basically the same thing, and what I’m hearing is these people want to get up on the stand and show the jury a video which either:

A) to the particularly inattentive, shows the victim clearly alive, or

B) demonstrates that even video evidence can be completely fabricated from whole cloth, and the opposition is more than capable of doing so to serve their own interests

Barring the staggeringly unlikely event that the defendant goes full-on Perry Mason Perp and outright says “hey, sorry I killed you, man” to the hologram, this seems like a pretty sweet deal.

B) demonstrates that even video evidence can be completely fabricated from whole cloth, and the opposition is more than capable of doing so to serve their own interests

It’s a victim impact statement. It’s not evidence. Victim Impact Statements are provided/read/whatever AFTER the finding of guilt, but before sentencing.

The judge was so moved by a call for forgiveness that he increased the recommended sentence… Or if that’s not the case, that’s some poor writing in the article

Rape and stupid nonsense…that is all ai is.

There is absolutely zero chance I would allow anyone to theorize what they think I would say using AI. Hell, I don’t like AI in its current state, and that’s the least of my issues with this.

It’s immoral. Regardless of your relation to a person, you shouldn’t be acting like you know what they would say, let alone using that to sway a decision in a courtroom. Unless he specifically wrote something down and it was then recited using the AI, this is absolutely wrong.

It’s selfish. They used his likeness to make an apology they had no possible way of knowing he would make, and they did it to make themselves feel better. They could’ve written a letter in their own voices instead of turning this into some weird dystopian spectacle.

“It’s just an impact statement.”

Welcome to the slippery slope, folks. We allow use of AI into courtrooms, and not even for something cool (like quickly producing a 3d animation of a car accident for use in explaining—with actual human voices—what happened at the scene). Instead, we use it to sway a judge’s sentencing, while also making an apology on behalf of a dead person (using whatever tech you want because that is not the main problem here) without their consent or even any of their written (you know, like in a will) thoughts.

Pointing to “AI bad” for these arguments is lazy, reductive, and not even remotely the main gripe.

allow use of AI into courtrooms

Surprised the judge didn’t kick that shit to the curb. There was one case where the defendant made an AI avatar, with AI generated text, to represent himself and the judge said, “Fuck outta here with that nonsense.”

Why would a judge allow this? It’s like showing the jury a made-for-TV movie based on the trial they’re hearing.

Not only did he allow it,

While the state asked for a nine-and-a-half year sentence, the judge handed Horcasitas a 10-and-a-half year sentence after being so moved by the video, Pelkey’s family said, noting the judge even referred to the video in his statement.

It has about as much evidentiary value as a ouija board, but since the victim was a veteran and involved with a church and the judge likes those things we can ignore pesky little things like standards of proof and prejudice

Fucking yikes that judge needs to be removed

Twist: the judge used AI to write his sentencing statement. It’s chat bots all the way down.

A horror story in two sentences.

I’ll take ten AI simps over one MAGA ally judge.

Seems like grounds for a mistrial…

On the other hand I do like that some road rage dipshit got a long sentence

Arizona State professor of law Gary Marchant said the use of AI has become more common in courts.

“If you look at the facts of this case, I would say that the value of it overweighed the prejudicial effect, but if you look at other cases, you could imagine where they would be very prejudicial,” he told AZFamily.

Could you imagine how prejudicial such a thing might be? Not here, of course. /S

Jury duty would be a lot more fun if trials were narrated by the Unsolved Mysteries guy

The trial was already over. This was for the sentencing.

So the original comment is just dumb because they couldn’t be bothered to read the article, but upvotes it gets.

Eww, that’s such a ghoulish thing to do; letting a distortion of a dead person, that could never act as the deceased person, forgive their killer. Do they even know if he would’ve done this if he had a say before being killed?