i absolutely hate how the modern web just fails to load if one has javascript turned off. i, as a user, should be able to switch off javascript and have the site work exactly as it does with javascript turned on. it’s not a hard concept, people.

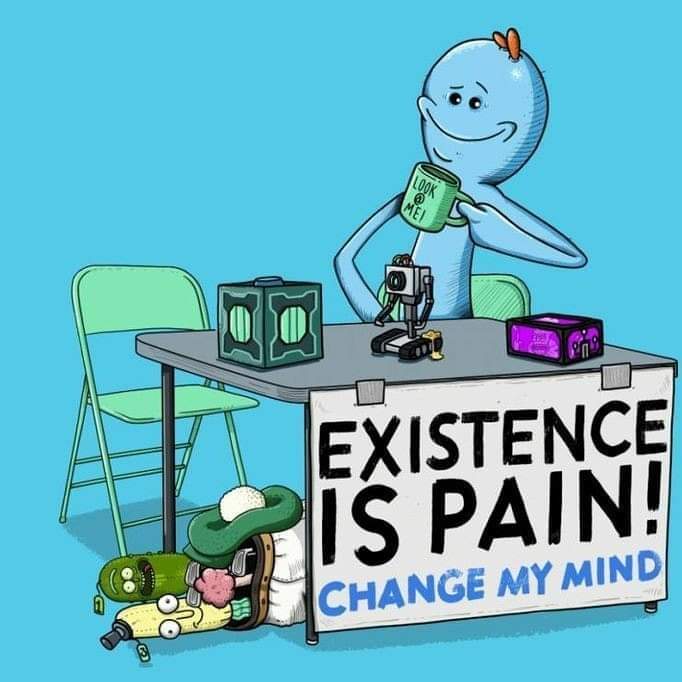

but you ask candidates to explain “graceful degradation” and they’ll sit and look at you with a blank stare.

It’s when you call someone a pathetic bottom bitch while wearing an evening gown.

I built an internal tool that works with or without JS turned on, but web devs want something simple for them with a framework, which is why you have to download 100 MB just for a basic form page.

Funny, from my standpoint, more functional JavaScript almost always feels like service degradation - as in, the more I block, the better and the faster the website runs.

personally I think this is mostly because people tend to give up on visiting a website if it takes more than a second or two to load (guilty as charged, though), so instead sites load a mostly blank page (which signals that it’s loading) and then use JavaScript to load the rest of the content in.

that and fucking ads galore

And trackers.

And JavaScript that gives you the time in the page, as if you didn’t have a clock on your desktop.

And JavaScript that gives you a fake chat window to talk to a shitty AI nobody wants in the bottom-right corner.

And Javascript to annoy you with GDPR shit everybody absent-mindedly clicks away anyway.

And Javascript to inform you that the site uses cookies, as if it mattered since it won’t work without cookies.

And Javascript that nags you for a subscription or stops you scrolling to force you to create an account.

…And of course, all that is done by loading megabytes and megabytes of shit recursively from a kajillion nested addresses because web “developers” couldn’t code tight code if their lives depended on it. All they do is import pre-chewed shit that acts as trojans for big data players to plant more trackers and more ads in your browser, just to serve up barf people by and large don’t give a shit about.

Fair, some websites do need JavaScript though. Such as webapps. Could they be server-side rendered?

Yes. Web apps existed before JavaScript.

Depending on the web app, the real solution would be a much more simplified JavaScript free version

SSR is a thing and could be used to render most content remotely without pissing off readers.

They know SSR is a thing, which is why they used that term. But SSR produces a static page or static component, whereas web apps often require some level of interactivity for their basic functionality, such as reacting to server events. They’re asking whether that can be achieved with SSR.

Some surely know, they just don’t care.

I wrote my CV site in React and Next.js configured for SSG (Static Site Generation) which means that the whole site loads perfectly without JavaScript, but if you do have JS enabled you’ll get a theme switching and print button.

That said, requiring JS makes sense on some sites, namely those that act more like web apps that let you do stuff (like WhatsApp or Photopea). Not for articles, blogs etc. though.

requiring JS makes sense on some sites, namely those that act more like web apps that let you do stuff (like WhatsApp

I mean yes, but WhatsApp is a bad example. It could easily use no JavaScript. In the end it’s the same as Lemmy or any other forum. You could post a message, get a new page with the message. Switching chats is loading a new page. Of course JavaScript enhances the experience, makes it more fluid, etc., but messengers could work perfectly fine without JavaScript.

How would a page fetch new messages for you without JS?

You don’t. That’s the graceful degradation part. You can still read your chat history and send new messages, but receiving messages as they come requires a page reload or enabling JS.
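To make that concrete, here is a rough sketch of what the no-JS fallback could look like: the server renders the history as plain HTML, sending is an ordinary form POST, and "refresh" is just a link. Everything here is an assumption for illustration (the `Message` shape, the `/chats/...` URLs, the `live-updates.js` script name); it is not how WhatsApp or any real messenger does it.

```typescript
// Hypothetical message shape, invented for this sketch.
interface Message {
  author: string;
  text: string;
}

// Escape user-supplied text so it cannot inject markup into the page.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render the whole chat as plain HTML. Reading and sending work with JS off;
// the optional script at the end can layer live updates on top when JS is on.
function renderChatPage(chatId: string, messages: Message[]): string {
  const id = encodeURIComponent(chatId);
  const items = messages
    .map((m) => `<li><b>${escapeHtml(m.author)}:</b> ${escapeHtml(m.text)}</li>`)
    .join("\n");
  return [
    "<!doctype html>",
    '<ul id="history">',
    items,
    "</ul>",
    // A plain form POST: the server stores the message and redirects back here.
    `<form method="post" action="/chats/${id}/messages">`,
    '  <input name="text" required>',
    '  <button type="submit">Send</button>',
    "</form>",
    // Without JS, new messages show up when the user follows this link.
    `<a href="/chats/${id}">Check for new messages</a>`,
    // Hypothetical enhancement script; the page is usable if it never runs.
    '<script src="/live-updates.js" defer></script>',
  ].join("\n");
}
```

With JS disabled you lose the live updates and nothing else, which is the whole point of the word "degradation".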

my only issue with this approach (requiring a page reload) is that it would essentially require a whole new processing system: instead of being sent via events, everything would need to be rendered and sent server-side. This also forces the server to load everything at once instead of dynamically, as it does now, which increases the load on the server node that renders the page. It also removes the potential for service isolation between the parts of the page, meaning that if one component goes down (such as chat history), the entire page handler goes down, and it worsens page response and load times. That’s the downside of those old legacy-style pages: they are a pain in the ass to maintain, run slower and don’t have much failover ability.

It’s basically asking the provider to spend more to: make the service slower, remove features from the site (both information and functionality wise) and have a more complex setup when scaling, to increase compatibility for a minor portion of the current machines and users out there.

this is of course also ignoring the increased request load, as you now have to resend entire webpages to get data instead of just messages/updates.

The web interface can already be reloaded at any time and has to do all of this. You seem to be missing we’re talking about degradation here, remember the definition of the word, it means it isn’t as good as when JS is enabled. The point is it should still work somehow.

Just to make sure we are on the same page then, cause I don’t see the issue with my post.

I am using the term “Graceful Degradation” which is meant as a fault tolerance for tech stacks to allow for a critical component to be removed.

This critical component people are talking about is JavaScript, which is used for all dynamically loaded content, and for failover protection so that one service going down doesn’t take the entire page down (also an example of fault tolerance).

The proposed solution given would remove that fault tolerance for the reasons I provided in the original reply, while degrading the users experience due to increased page load time (users reloading the page inconsistently vs consistently to get new information) and increasing maintenance costs and overhead on the provider.

Additionally, the new processing system, which you say already exists, generally doesn’t, because websites mostly use a dynamic loading style nowadays, not a static page (as in, one the client doesn’t change), which is what this type of system would require.

note: edits were for phrasing, and a typo

Maybe I’m out of the loop because I do mostly backend, but how do you update the chat window when new chats come in, without JavaScript?

You don’t, I’m saying it would still mostly work. Getting messages as they arrive is nice but not necessary. For example, I personally have all notifications off, and I only see messages when I specifically look for them, no one can reach me instantly. Everyone seems to be missing that we’re talking about degradation here, it degrades, it gets worse with JS disabled. But it shouldn’t straight up not work.

A good example for something that does not work without JS would have been a drawing application like they said, or games, there are plenty of things that literally do not work without JS, but messaging is not one of them. Instant messaging would be of course.

I also feel like everyone seems to be missing that we’re talking about degradation, which isn’t usually “no JS at all”; it’s some subset that isn’t supported. People use feature detection to find out if some feature is supported in the browser, and if it’s not, they don’t enable the feature that depends on it.
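A minimal sketch of that kind of feature detection, applied to the chat example: probe for what the environment offers and fall back step by step. The `Env` type, the `Transport` names and `pickTransport` itself are made up for illustration; only `WebSocket` and `EventSource` are real browser globals.

```typescript
// Minimal view of the browser's global object; only used for probing.
type Env = Record<string, unknown>;

// Hypothetical labels for how the chat would receive new messages.
type Transport = "websocket" | "event-source" | "manual-reload";

// Pick the best update mechanism the environment actually supports.
// Constructors report typeof "function", so that is what we check for.
function pickTransport(env: Env): Transport {
  if (typeof env["WebSocket"] === "function") return "websocket";
  if (typeof env["EventSource"] === "function") return "event-source";
  // Nothing suitable: the page still works, the user reloads to see updates.
  return "manual-reload";
}
```

In a browser you would presumably call it as `pickTransport(globalThis as unknown as Env)`. The design choice is that the last branch is still a working state, not an error.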

For the chat example, you could argue that a chat can degrade into a bulletin board, but I’d argue that people use chat for realtime messaging so js is needed for the base use case.

If your webpage primarily just displays static information, then I agree that it should work without js or css. Like Wikipedia, or a blog, or news, or a product marketing page, or a forum/BBS.

But there is a huge part of the web that this simply doesn’t apply to, and it’s not realistic to have them put in huge effort to support what can only be a broken experience for a fraction of a percent of users.

How would you solve end-to-end encryption without JavaScript?

Love it when a page loads, and it’s just a white blank. Like, you didn’t even try. Do I want to turn JS on or close the tab? Usually, I just close the tab and move on. Nothing I need to see here.

React tutorials are like that. You create a simple HTML page with a script and the script generates everything.

I had to do a simple webpage for an embedded webserver and the provider of the library recommended preact, the lightweight version of react. Having no webdev experience, I used preact as recommended and it is a nightmare to use and debug.

Most don’t even know `@media (prefers-color-scheme: dark/light)`, and would rather cobble something together with JS that works half of the time and needs buttons to toggle.

Took me ages to find a snippet that has a manual dark mode toggle but works with `prefers-color-scheme`, so by default it inherits your settings but you can override it… It’s just not a priority for a lot of people, or they’re OK with the site flashing or something.
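Not the snippet mentioned above, just a sketch of the decision logic such a toggle needs: a stored manual choice wins, otherwise the system preference applies. `resolveTheme` and `toggleTheme` are names invented here, and the idea of keeping the override in storage is an assumption.

```typescript
type Theme = "light" | "dark";

// storedOverride: whatever the user last picked with the toggle, or null.
// systemPrefersDark: what the (prefers-color-scheme: dark) media query reports.
function resolveTheme(storedOverride: string | null, systemPrefersDark: boolean): Theme {
  // An explicit, valid user choice always wins.
  if (storedOverride === "light" || storedOverride === "dark") {
    return storedOverride;
  }
  // No (or garbage) override: inherit the system setting.
  return systemPrefersDark ? "dark" : "light";
}

// What the toggle button should switch to.
function toggleTheme(current: Theme): Theme {
  return current === "dark" ? "light" : "dark";
}
```

In a browser the inputs would likely come from something like `localStorage.getItem("theme")` (the key name is hypothetical) and `window.matchMedia("(prefers-color-scheme: dark)").matches`. With JS off, the CSS media query alone still gives you the right default, minus the button.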

A button to toggle is good design, it should just default to your system preferences.

Bookmarking this, so far I’ve cobbled my Dark/Light Mode switch together with Material-UI themes, but this seems like the cleaner way to do this that I’ve been searching for!

Also note `prefers-reduced-motion` for accessibility.

It is substantially harder to make a modern website work without JavaScript. Not impossible, but substantially harder. HTML forms are not good at doing most things. Plus, a full page refresh on nearly any button click would be a bad experience.

The web isn’t just HTML and server side scripting anymore. A modern website uses Javascript for many key essentials of the site’s operation. I’m not saying that’s always a good thing, but it is a true thing.

It is no longer a reasonable expectation that a website work with JavaScript disabled in the browser. Most of the web is now in content management systems that use JavaScript for browser support, accessibility, navigation, search, analytics and many aspects of page rendering and refreshing.

The web isn’t just HTML and server side scripting anymore. A modern website uses Javascript for many key essentials of the site’s operation.

which is why the modern web is garbage

I don’t know how you’re gonna get everything to work without JavaScript. You can’t do a lot of interactivity stuff without it.

I’ve had news articles not work without javascript. (unpaywalled as well).

I’ve spent the last year building a Lemmy and PieFed client that requires JavaScript. This dependency on JavaScript allows me to ship you 100% static files, which after being fully downloaded, have 0 dependency on a web server. Without JavaScript, my cost of running web servers would be higher, and if I stopped paying for those servers, the client would stop working immediately. Instead, I chose to depend heavily on JavaScript which allows me to ship a client that you can fully download, if you choose, and run on your own computer.

As far as privacy, when you download my Threadiverse client* and inspect network requests, you will see that most of the network requests it makes are to the Lemmy/PieFed server you select. The 2 exceptions being any images that aren’t proxied via Lemmy/PieFed, and when you login, I download a list of the latest Lemmy servers. If I relied on a web server for rendering instead of JavaScript, many more requests would be made with more opportunities to expose your IP address.

I truly don’t understand where all this hate for JavaScript comes from. Late stage capitalism, AI, and SaaS are ruining the internet, not JavaScript. Channel your hate at big tech.

*I deliver both web and downloadable versions of my client. The benefits I mentioned require the downloaded version. But JavaScript allows me to share almost 100% code between the web and downloaded versions. In the future, better PWA support will allow me to leverage some of these benefits on web.

The matter is not JavaScript per se but the use companies and new developers make of it; if everyone used it like you, there would probably be no problem. A gazillion dependencies and zero optimization, eating up CPU, spying on us, advertisements…

And if you try and use an alternative browser you know many websites won’t work.

Problem is so many websites are slow for no good reason.

And JS is being used to steal our info and push aggressive advertising.

Which part is unknown to you?

I don’t understand why we are blaming the stealing info part on JavaScript and not the tech industry. Here is an article on how you can be tracked (fingerprinted) even with JavaScript disabled. As for slow websites, also blame the tech industry for prioritizing their bottom line over UX and not investing in good engineering.

Problem is so many trains are ugly for no good reason.

And steel is being used to shoot people and stab people aggressively.

Problem is so many websites are slow for no good reason.

Bad coding is a part of it. “It works on my system, where the server is local and I’m opening the page on my overclocked gamer system”. Bad frameworks are also a part of it. React, for example, decided that running code is free, and bloated their otherwise very nice system to hell. It’s mildly infuriating moving from a fast, working solution to something that decided to implement basic language features as a subset of the language itself.

Trackers, ads, dozens (if not hundreds) of external resources are also a big part of it. Running a decent request-blocking extension (stuff like uBlock Origin) adds a lot of work to loading a page, and still makes pages seem more responsive because of the sheer amount of blocked resources. It’s night and day.

Yeah, it should also work without a browser exactly as it does with a browser

They also continually forget that you can’t do frontend only validation for things.
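A sketch of why: anything the browser checks can be skipped by a client that posts directly, so the server has to run the same rules again. The form fields, the rules and the `handleSignup` handler below are all hypothetical; the status codes are just one reasonable choice.

```typescript
// Hypothetical form payload; fields arrive as strings, as in a real form POST.
interface SignupForm {
  email: string;
  age: string;
}

// One set of rules. The frontend may call it for fast feedback,
// but the server must call it too, because the client can lie or skip it.
function validateSignup(form: SignupForm): string[] {
  const errors: string[] = [];
  // Deliberately loose email check; a sketch, not a full address grammar.
  if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(form.email)) {
    errors.push("email: invalid address");
  }
  const age = Number(form.age);
  // Reject empty input explicitly, since Number("") is 0.
  if (form.age.trim() === "" || !Number.isInteger(age) || age < 0 || age > 150) {
    errors.push("age: must be a whole number between 0 and 150");
  }
  return errors;
}

// Server-side handler: never trusts that the browser already validated.
function handleSignup(body: SignupForm): { status: number; errors: string[] } {
  const errors = validateSignup(body);
  return { status: errors.length === 0 ? 200 : 422, errors };
}
```

A nice side effect is that a form validated on the server works with JS off for free; the frontend check becomes an enhancement rather than the only line of defense.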

I thought graceful degradation in terms of web design was mostly just to promote using the latest current browser features but to allow it to fall back to the feature set of, say, 1 or 2 previous browser versions. Not to support a user completely turning off a feature that has been around for literal decades? I think what you’re promoting is the “opposite” side, progressive enhancement, where the website should mostly work through the most basic, initial features and then have advanced features added later for supported browsers.

Not OP, but welcome to my TED talk.

Supporting disabled JavaScript is a pretty significant need for accessibility features. None of the text browsers supported JavaScript until 2017, and there’s still a lot of old tech out there that doesn’t deal well with it.

It wasn’t until the rise of React and Angular that this became a big deal. But it’s extremely common now to send most of the website as code. And even scrapers now support JavaScript.

There’s no “minor point” clause on the term graceful degradation. At the same time, there’s no minimum requirement. Would it be good to be thorough and provide a static page? I’d say yes, but it’s not like anyone is going to do that anymore.

The tables have turned. You can no longer live without JavaScript, and now you need browsers that lie about your screen resolution, user agent and plugins, because megacorps can sniff out who you are from the slightest whiff of your config.

And that’s NOT pretty cool

Thanks for the response, good points all around. The fingerprinting is the most convincing argument to me but I think the accessibility issue you bring up is more important.